ollama - run large language models locally

LLM runner - Ollama

Ollama is an open-source project for running and managing large language models locally. It bundles model weights, configuration, and data into a single package, defined by a Modelfile. Ollama provides simple APIs for creating, running, and managing language models. Pre-built models are available in the library at https://ollama.com/library and can be downloaded and run directly. Whether you want to use popular models such as Llama 2 and Mistral or quickly deploy and test your own models, Ollama supports both.

setup on Windows

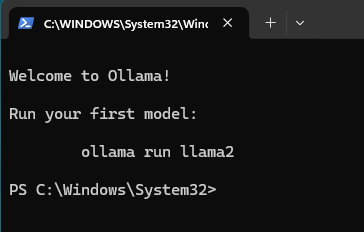

Download the application and install:

https://ollama.com/download/OllamaSetup.exe

After installation, a shell window opens automatically.

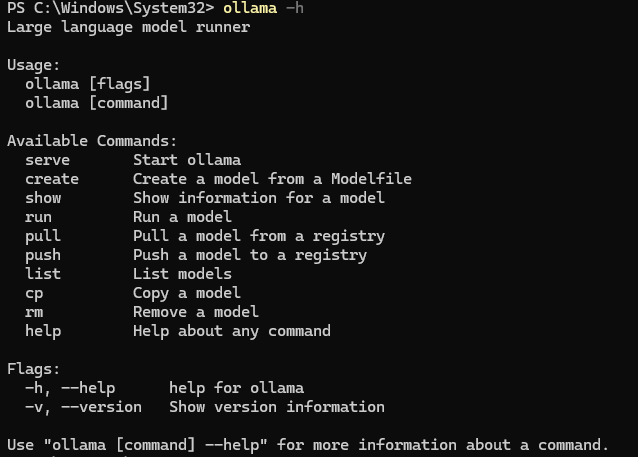

Check the ollama help info:

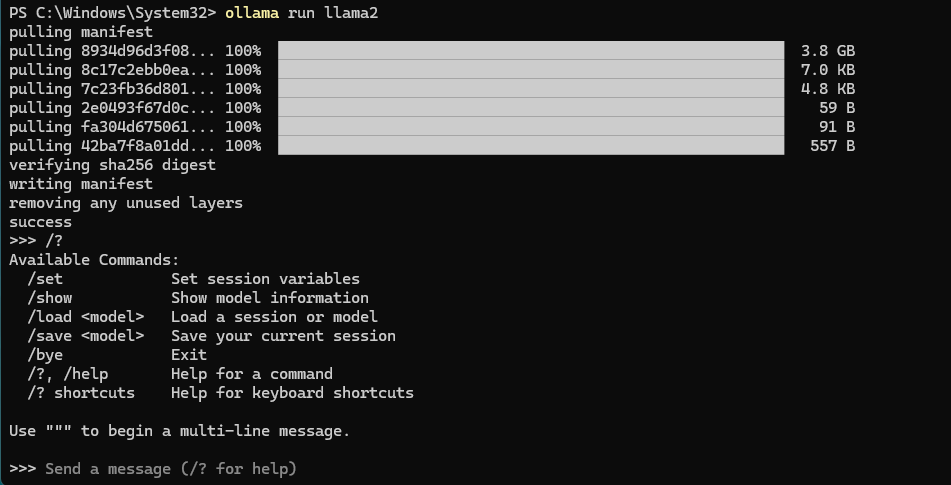

Run the llama2 model with the command ollama run llama2.

Models are stored at the following path:

C:\Users\<user name>\.ollama\models

setup on Linux

Manual install:

https://github.com/ollama/ollama/blob/main/docs/linux.md

Docker:

https://hub.docker.com/r/ollama/ollama
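For the Docker route, the following is a minimal sketch based on the CPU-only commands shown on the image's Docker Hub page. The function names `start_ollama_container` and `run_in_container` are hypothetical helpers introduced here for illustration; the volume name, container name, and port mapping follow the upstream example and may need adjusting for your machine.

```shell
# Start the Ollama server in a container (CPU only). Models are kept in the
# named volume "ollama" and the API is exposed on port 11434.
start_ollama_container() {
  docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama
}

# Run a model inside the already running container (defaults to llama2).
run_in_container() {
  docker exec -it ollama ollama run "${1:-llama2}"
}

# Usage:
#   start_ollama_container
#   run_in_container llama2
```

GPU setups need extra flags and host configuration, which the Docker Hub page describes.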

CLI reference

Create a model

ollama create is used to create a model from a Modelfile.

    ollama create mymodel -f ./Modelfile

Pull a model

ollama pull downloads a model from the library. It can also be used to update a local model, in which case only the diff is pulled.

    ollama pull llama2

Remove a model

    ollama rm llama2

Copy a model

    ollama cp llama2 my-llama2

List models

    ollama list
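The list and pull commands combine naturally into a "pull only if missing" check. The sketch below assumes that ollama list prints a header row followed by one model per row with the name (for example llama2:latest) in the first column; `model_in_list` is a hypothetical helper, not part of Ollama.

```shell
# Read `ollama list` output on stdin and succeed if the named model is present.
# A name without a tag is treated as "<name>:latest".
model_in_list() {
  want="$1"
  case "$want" in
    *:*) ;;                      # tag already given
    *) want="$want:latest" ;;    # default tag
  esac
  # Skip the header row, compare the first column, exit 0 only when found.
  awk -v want="$want" 'NR > 1 && $1 == want { found = 1 } END { exit !found }'
}

# Usage:
#   ollama list | model_in_list llama2 || ollama pull llama2
```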

Start Ollama

Use ollama serve when you want to start Ollama without running the desktop application.

    ollama serve
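Once the server is running, it can be reached over HTTP. The sketch below assumes the default address http://localhost:11434 and the /api/generate endpoint from Ollama's API documentation; neither is stated in this article. `ollama_is_up` and `generate_body` are hypothetical helpers, and `generate_body` does no JSON escaping, so it only suits simple prompts.

```shell
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Succeed if an HTTP server answers at the given base URL (default: OLLAMA_URL).
ollama_is_up() {
  curl -sf --max-time 2 "${1:-$OLLAMA_URL}/" >/dev/null 2>&1
}

# Build a non-streaming /api/generate request body.
# Assumption: model and prompt contain no double quotes or backslashes.
generate_body() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Usage (needs a running server and a pulled llama2 model):
#   ollama_is_up && curl -s "$OLLAMA_URL/api/generate" \
#     -d "$(generate_body llama2 'Why is the sky blue?')"
```

With "stream" set to false the server replies with a single JSON object instead of a stream of partial results.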

Pass in the prompt as an argument

    ollama run llama2 "prompt"
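Because the prompt is an ordinary shell argument, it can be assembled with command substitution, for example to include the contents of a file. This is a small sketch; `file_prompt` is a hypothetical helper and the wording of the prompt is only an illustration.

```shell
# Build a prompt that embeds the contents of a text file.
file_prompt() {
  printf 'Summarize this file: %s' "$(cat "$1")"
}

# Usage:
#   ollama run llama2 "$(file_prompt README.md)"
```

Quoting the substitution keeps the whole prompt as a single argument even when the file contains spaces or newlines.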

Customize a prompt

Check the models:

    PS C:\Windows\System32> ollama list

Create a Modelfile and save it to disk. An example Modelfile:

    FROM llama2

For more parameter details, see https://github.com/ollama/ollama/blob/main/docs/modelfile.md
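To make the customization concrete, here is a sketch that writes a slightly fuller Modelfile from the shell. The PARAMETER and SYSTEM instructions are documented in the Modelfile reference linked above; the temperature value, the system message, and the `MODELFILE_PATH` variable are illustrative assumptions, not the author's original file.

```shell
# Where to write the file; override by setting MODELFILE_PATH beforehand.
MODELFILE_PATH="${MODELFILE_PATH:-./Modelfile}"

# The quoted 'EOF' keeps the shell from expanding anything inside the file.
cat > "$MODELFILE_PATH" <<'EOF'
FROM llama2
# Higher temperature is more creative, lower is more coherent
PARAMETER temperature 1
# System message applied to every conversation
SYSTEM """
You are a concise assistant. Answer in one short paragraph.
"""
EOF

# Next step: ollama create choose-a-model-name -f "$MODELFILE_PATH"
```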

Create and run the model

    ollama create choose-a-model-name -f <location of the file e.g. ./Modelfile>
    ollama run choose-a-model-name

reference

Article title: ollama - run large language models locally

Author: Mr Bluyee

Published: 2024-04-02

Last updated: 2024-04-02

Original link: https://www.mrbluyee.com/2024/04/02/ollama-run-large-language-models-locally/

Copyright notice: The author owns the copyright; please credit the source when reproducing.